When the Ninth Circuit Court of Appeals considered a lawsuit against Google in 2020, Judge Ronald M. Gould stated his view of the tech giant's most significant asset bluntly: "So-called 'neutral' algorithms," he wrote, can be "transformed into deadly missiles of destruction by ISIS."

According to Gould, it was time to challenge the boundaries of a little snippet of the 1996 Communications Decency Act known as Section 230, which protects online platforms from liability for the things their users post. The plaintiffs in this case, the family of a young woman who was killed during a 2015 Islamic State attack in Paris, alleged that Google had violated the Anti-terrorism Act by allowing YouTube's recommendation system to promote terrorist content. The algorithms that amplified ISIS videos were a danger in and of themselves, they argued.

Gould was in the minority, and the case was decided in Google's favor. But even the majority cautioned that the drafters of Section 230, people whose conception of the World Wide Web might have been limited to the likes of email and the Yahoo homepage, never imagined "the level of sophistication algorithms have achieved." The majority wrote that Section 230's "sweeping immunity" was "likely premised on an antiquated understanding" of platform moderation, and that Congress should reconsider it. The case then headed to the Supreme Court.

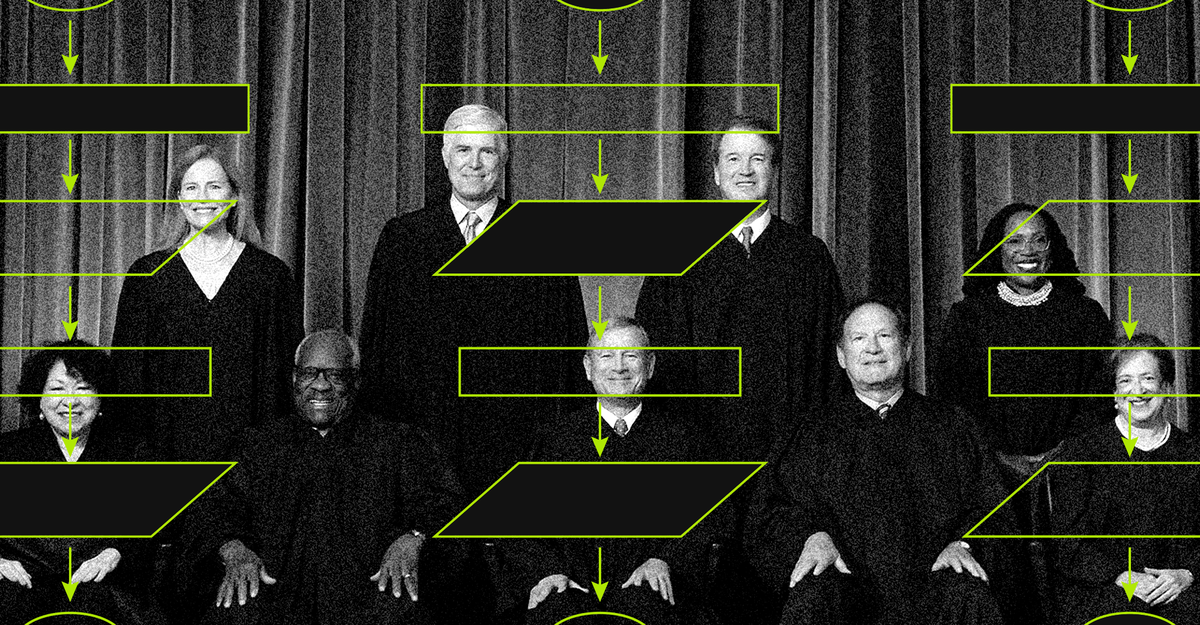

This month, the country's highest court will consider Section 230 for the first time as it weighs a pair of cases, *Gonzalez v. Google* and another against Twitter, that invoke the Anti-terrorism Act. The justices will seek to determine whether online platforms should be held accountable when their recommendation systems, operating in ways that users can't see or understand, aid terrorists by promoting their content and connecting them to a broader audience. They'll consider whether algorithms, as creations of a platform like YouTube, are something distinct from any other aspect of what makes a website a platform that can host and present third-party content. And, depending on how they answer that question, they could transform the internet as we currently know it, and as some people have known it for their entire lives.

The Supreme Court's choice of these two cases is surprising, because the core issue seems so obviously settled. In the case against Google, the appellate court referenced a similar case against Facebook from 2019, regarding content created by Hamas that had allegedly encouraged terrorist attacks. The Second Circuit Court of Appeals decided in Facebook's favor, although, in a partial dissent, then–Chief Judge Robert Katzmann admonished Facebook for its use of algorithms, writing that the company should consider not using them at all. "Or, short of that, Facebook could modify its algorithms to stop them introducing terrorists to one another," he suggested.

In both the Facebook and Google cases, the courts also reference a landmark Section 230 case from 2008, filed against the website Roommates.com. The site was found liable for encouraging users to violate the Fair Housing Act by giving them a survey that asked whether they preferred roommates of certain races or sexual orientations. By prompting users in this way, Roommates.com "developed" the information and thus directly caused the illegal activity. Now the Supreme Court will evaluate whether an algorithm develops information in a similarly meaningful way.

The broad immunity outlined by Section 230 has been contentious for decades, but it has attracted special attention and increased debate in the past several years for various reasons, including the Big Tech backlash. For both Republicans and Democrats seeking a way to check the power of internet companies, Section 230 has become an appealing target. Donald Trump wanted to get rid of it, and so does Joe Biden.

Meanwhile, Americans are expressing harsher feelings about social-media platforms and have become more articulate in the language of the attention economy; they're aware of the possible radicalizing and polarizing effects of websites they used to consider fun. Personal-injury lawsuits have cited the power of algorithms, while Congress has considered efforts to regulate "amplification" and compel algorithmic "transparency." When Frances Haugen, the Facebook whistleblower, appeared before a Senate subcommittee in October 2021, the Democrat Richard Blumenthal remarked in his opening comments that there was a question "as to whether there is such a thing as a safe algorithm."

Though ranking algorithms, such as those used by search engines, have historically been protected, Jeff Kosseff, the author of a book about Section 230 called *The Twenty-Six Words That Created the Internet*, told me he understands why there is "some temptation" to say that not all algorithms should be covered. Sometimes algorithmically generated recommendations do serve harmful content to people, and platforms haven't always done enough to prevent that. So it might feel helpful to say something like *You're not liable for the content itself, but you are liable if you help it go viral.* "But if you say that, then what's the alternative?" Kosseff asked.

Maybe you should get Section 230 immunity only if you put every single piece of content on your website in precise chronological order and never let any algorithm touch it, sort it, organize it, or block it for any reason. "I think that would be a pretty bad outcome," Kosseff said. A site like YouTube, which hosts millions upon millions of videos, would probably become functionally useless if touching any of that content with a recommendation algorithm could mean risking legal liability. In an amicus brief filed in support of Google, Microsoft called the idea of removing Section 230 protection from algorithms "illogical," and said it would have "devastating and destabilizing" effects. (Microsoft owns Bing and LinkedIn, both of which make extensive use of algorithms.)

Robin Burke, the director of That Recommender Systems Lab at the University of Colorado at Boulder, has a similar issue with the case. (Burke was part of an expert group, organized by the Center for Democracy and Technology, that filed another amicus brief for Google.) Last year, he co-authored a paper on "algorithmic hate," which dug into possible causes for widespread loathing of recommendations and ranking. He cited, as an example, Elon Musk's 2022 declaration about Twitter's feed: "You are being manipulated by the algorithm in ways you don't realize." Burke and his co-authors concluded that user frustration, fear, and algorithmic hate may stem in part from "the lack of knowledge that users have about these complex systems, evidenced by the monolithic term 'the algorithm,' for what are in fact collections of algorithms, policies, and procedures."

When we spoke recently, Burke emphasized that he doesn't deny the harmful effects that algorithms can have. But the approach suggested in the lawsuit against Google doesn't make sense to him. For one thing, it suggests that there is something uniquely bad about "targeted" algorithms. "Part of the problem is that that term's not really defined in the lawsuit," he told me. "What does it mean for something to be targeted?" There are a lot of things that most people do want to be targeted. Typing *locksmith* into a search engine wouldn't be practical without targeting. Your friend recommendations wouldn't make sense. You would probably end up listening to a lot of music you hate. "There's not really a good place to say, 'Okay, this is on one side of the line, and these other systems are on the other side of the line,'" Burke said. More importantly, platforms also use algorithms to find, hide, and minimize harmful content. (Child-sex-abuse material, for instance, is often detected through automated processes that involve complex algorithms.) Without them, Kosseff said, the internet would be "a disaster."

"I was really surprised that the Supreme Court took this case," Kosseff told me. If the justices wanted an opportunity to reconsider Section 230 in some way, they've had plenty of them. "There have been other cases they denied that would have been better candidates." For instance, he named a case filed against the dating app Grindr for allegedly enabling stalking and harassment, which argued that platforms should be liable for fundamentally bad product features. "This is a real Section 230 dispute that the courts are not consistent on," Kosseff said. The Grindr case was unsuccessful, but the Ninth Circuit was convinced by a similar argument made by plaintiffs against Snap regarding the deaths of two 17-year-olds and a 20-year-old, who were killed in a car crash while using a Snapchat filter that shows how fast a vehicle is moving. Another case, alleging that the "talk to strangers" app Omegle facilitated the sex trafficking of an 11-year-old girl, is in the discovery phase.

Many cases arguing that a connection exists between social media and specific acts of terrorism are also dismissed, because it's hard to prove a direct link, Kosseff told me. "That makes me think this is kind of an odd case," he said. "It almost makes me think that there were some justices who really, really wanted to hear a Section 230 case this term." And for one reason or another, the ones they were most interested in were the ones about the culpability of that mysterious, misunderstood modern villain, the all-powerful algorithm.

So the algorithm will soon have its day in court. Then we'll see whether the future of the web will be messy and confusing and sometimes dangerous, like its present, or totally absurd and honestly kind of impossible. "It would take an average user approximately 181 million years to download all data from the web today," Twitter wrote in its amicus brief supporting Google. A person may think she wants to see everything, in order, untouched, but she really, really doesn't.